From Sceptics to Power Users: A 5-Step Framework for Mastering AI Adoption

Successful AI development tool adoption requires a systematic, methodical approach rather than a rush into widespread implementation. Here we share our framework for winning developer buy-in, based on findings from our internal AI Developer Tools initiative.

While 97% of developers now use AI tools individually*, many organizations struggle to adopt them systematically, in a way that enables knowledge sharing, security compliance, and measurable improvement across teams.

Having started our structured AI adoption journey in early 2023, we've developed and refined a framework that addresses the common pitfalls organizations face.

Our AI Developer Tools (AIDT) initiative brings together engineers from different teams, and at different stages of adoption, to share tips, challenges, and time-savers, as part of our R&D efforts with Whitespectre Labs.

By encouraging collaboration and experimentation, and by tracking and assessing the results, we're able to learn which tools we want to roll out across the entire team. Experienced developers and engineers can be sceptical and resistant to change, so you also need the right approach to get everybody to buy in to any new tool.

In this article, you'll learn how we identified our favourite AI development tool, Cursor, and how we successfully rolled it out across our whole team.

Common pitfalls in tool adoption

Before we get into the steps for successful adoption, it's worth outlining some of the common pitfalls we've seen when identifying, validating, and rolling out new tools for development teams:

- Ad-hoc individual adoption without a systematic approach to knowledge sharing: AI adoption doesn't stop at getting individuals to experiment with the tools; it's about testing a variety of tools in real-life scenarios and sharing what works (and what doesn't). That way you can create processes to identify the best tools and roll them out across your whole team, rather than having people work ad hoc and in silos.

- Security oversights: Letting your team use AI without a systematic approach or any oversight raises very real security concerns. You need to make sure the tools everybody's using to save time aren't compromising your own or your clients' data privacy.

- Shallow adoption vs true mastery: Without a way to share best practices, most engineers never learn to use AI development tools to their fullest extent. They stick to autocomplete and never take full advantage of the more advanced features in their workflows.

A 5-step framework for enterprise-wide AI tool adoption

To successfully roll out a new AI development tool, follow these five steps:

- Make sure it’s the right change (and prove it)

- Get a core group of impacted people on board

- Bring in the wider group: explain the why and the how, and have people who have already used the tool demonstrate its benefits

- Provide channels for peer-to-peer feedback, support and coaching

- Track adoption and impact

Here’s how:

1. Make sure it's the right change (and prove it)

The first step to successful AI tool adoption is to validate that your chosen tools are the right ones.

Developers and engineers can be justifiably sceptical about changes to their established workflows. The last thing you want is to roll out the wrong tool and then replace it with something else a few months later; that's a great way to squander goodwill and erode buy-in.

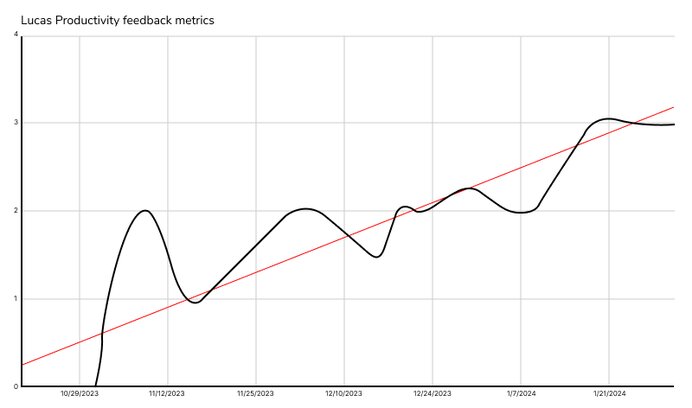

Starting with a few experienced people, the core members of our AIDT team validated the usefulness of Cursor alongside other tools over many months, measuring its impact on quick coding tasks as well as on getting up to speed with new projects and programming languages. By the end, we had the data to back up our choice.

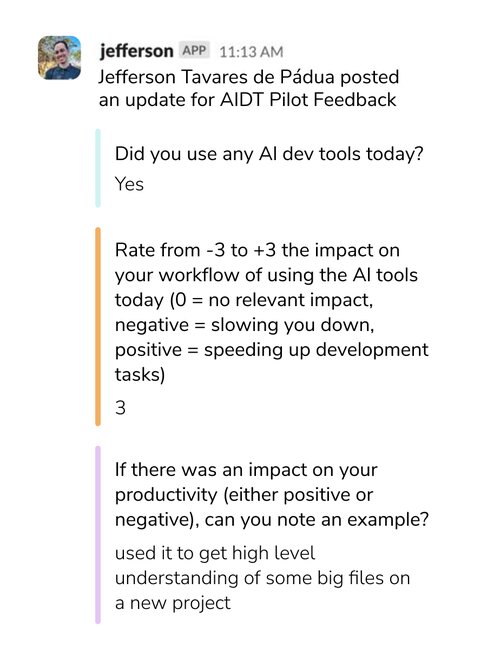

For our pilot group, we started with a daily Slack survey that asked team members:

- What AI tools did you use today?

- On a scale of -3 to +3, how much time did it save you?

- Can you tell us about your experience?

These quick check-ins were key to understanding how the tools performed in real, day-to-day workflows, and they helped the team work out where the tools were costing time and where they were saving it.
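To make daily check-ins like these actionable, the scores need to be rolled up per tool. The Python below is a minimal sketch of one way to do that, not a description of our actual tooling. It assumes each answer is stored as one record per engineer, tool and day, and that negative scores mean the tool cost time rather than saved it. The `SurveyResponse` and `summarize` names, and the placeholder tool name "ToolB", are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class SurveyResponse:
    """One hypothetical daily answer: one record per engineer, tool and day."""
    engineer: str
    tool: str          # from "What AI tools did you use today?"
    time_saved: int    # -3 (assumed: cost a lot of time) .. +3 (saved a lot of time)
    comment: str = ""  # free-text answer about the experience


def summarize(responses: list[SurveyResponse]) -> dict[str, tuple[float, int, float]]:
    """Return {tool: (average score, number of responses, share of negative days)}."""
    by_tool: dict[str, list[int]] = defaultdict(list)
    for r in responses:
        if not -3 <= r.time_saved <= 3:
            raise ValueError(f"score out of range: {r.time_saved}")
        by_tool[r.tool].append(r.time_saved)

    summary = {}
    for tool, scores in by_tool.items():
        negative_days = sum(1 for s in scores if s < 0)
        summary[tool] = (
            round(mean(scores), 2),
            len(scores),
            round(negative_days / len(scores), 2),
        )
    return summary


responses = [
    SurveyResponse("ana", "Cursor", 2),
    SurveyResponse("ben", "Cursor", -1),
    SurveyResponse("ana", "ToolB", 1),  # "ToolB" is a placeholder name
]
print(summarize(responses))
```

Reporting the share of negative days alongside the average keeps potential time sinks visible, instead of letting a few strongly positive days hide them.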

To support adoption, we had the group meet once a week to share tips, tricks, and observations. This surfaced both benefits, like quickly identifying code areas impacted by a feature, and drawbacks, like the need for precise prompts to avoid errant results.

The survey showed that even sceptics progressed along the adoption curve. They came to see the value of the tools for certain tasks and, just as importantly, discovered where using them would be a potential time sink (where fixing the AI-generated code would take longer than writing it from scratch).

Crucially, this first phase also confirmed that Cursor posed no data security risk to our clients, as it only saves telemetry and usage data and doesn't store code.

2. Get a core group of impacted people on board

Once we'd established Cursor's usefulness with a few key AIDT project members, the next phase involved bringing together a larger group of senior engineers to test the tool more widely in real-life scenarios and validate it further before a full rollout.

The people selected came with varying levels of buy-in and experience with AI, and were well placed to share best practices: what worked and what didn't. Through knowledge sharing in regular meetings and a dedicated Slack channel, we reached a point where everybody in that group was an internal evangelist for Cursor.

We continued the regular check-ins and daily surveys from the previous step, only now with a larger group. Through the weekly meetings, they quickly uncovered the strengths and weaknesses of specific tools. This helped us understand where the tools could save keystrokes and create new code faster, as well as which areas showed little to no time gained (or caused additional frustration!).

90% of our engineers saw a significant increase in productivity within two weeks of using AI-driven developer tools. For the last two years, we've been running our own experiments, piloting various AI tools to keep raising the bar on our work.

3. Bring in the wider group: explain the why and the how, and have people who have already used the tool demonstrate its benefits

Now that we had the data to back up our choice and were confident we'd selected the right tool, we could begin rolling Cursor out to the whole dev team. To ease the transition, we positioned Cursor as a familiar tool: like VS Code, which the entire team already used, but enhanced with AI features.

It's important that a change like this isn't purely top-down. When rolling out Cursor, the first thing we did was hold a call with the whole dev team, in which a senior developer from the AIDT project demoed the tool, using real-life examples from client-facing work to demonstrate its value. It's important to get someone credible (experienced and senior) to lead this call.

They were able to show how they use Cursor day to day and what tangible benefits the rest of the team could expect, while also setting realistic expectations about the thought and skill needed to get the most value from the tool.

This included a live example in which the senior developer showed how Cursor could be used to make changes to a legacy codebase, integrate those changes into a system you don't know well, and work with a technology you're not proficient in. The demonstration proved powerful because it was grounded firmly in a real-life, day-to-day use case.

4. Provide channels for peer-to-peer feedback, support and coaching

After the rollout call and demo, we set up a dedicated Slack channel to foster ongoing dialogue, so our AIDT veterans can help the wider team on their journey to proficiency.

It's important for everyone to share their experience in an ongoing forum, not just your most experienced people. It's also best practice to keep up the momentum with regular knowledge-sharing sessions.

You can't just throw something out there and assume you're "done"; you have to measure and manage it. So we track how the tools are used, identify patterns across projects and use cases, and feed those insights back into the organization to help everyone succeed.
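As an illustration of spotting patterns across projects and use cases, the sketch below groups usage records by project and use case and sorts them so the weakest combinations come first. It is a minimal example under stated assumptions, not our production reporting: the record layout, the reuse of the -3 to +3 survey score, the `min_events` cut-off, and all names and sample data are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical usage record: (engineer, project, use_case, score),
# where score reuses the -3..+3 time-saved scale from the daily survey.
UsageEvent = tuple[str, str, str, int]


def usage_patterns(
    events: list[UsageEvent], min_events: int = 2
) -> list[tuple[str, str, int, float]]:
    """Group events by (project, use_case); return (project, use_case, count, avg score).

    Groups with fewer than `min_events` records are dropped as too noisy to act on.
    Rows are sorted by average score, lowest first, so likely time sinks lead the report.
    """
    groups: dict[tuple[str, str], list[int]] = defaultdict(list)
    for _engineer, project, use_case, score in events:
        groups[(project, use_case)].append(score)

    rows = [
        (project, use_case, len(scores), round(mean(scores), 2))
        for (project, use_case), scores in groups.items()
        if len(scores) >= min_events
    ]
    return sorted(rows, key=lambda row: row[3])


events = [
    ("ana", "legacy-app", "refactoring", -2),
    ("ben", "legacy-app", "refactoring", -1),
    ("ana", "new-api", "scaffolding", 3),
    ("cy", "new-api", "scaffolding", 2),
    ("cy", "new-api", "tests", 1),
]
for row in usage_patterns(events):
    print(row)
```

Sorting lowest-first is a deliberate choice: the combinations where the tools cost time are the ones worth raising in the next knowledge-sharing session.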

5. Track adoption and impact, and help adopters take usage to the next level

It's a common trap for companies to think they've successfully adopted a new tool once 90% of their team is using it, but that's actually when the real work begins.

When it comes to adopting something like Cursor, there are two levels of proficiency:

Level 1: The Quick Wins

- Developers begin with basic autocomplete functionality

- Tab completion for classes, methods, and common patterns

- Immediate productivity boost through faster coding, though limited to single-file changes

Level 2: Advanced Techniques

- Multi-file changes

- Using Composer, i.e. engaging with the AI as a collaborative "junior" developer for simple tasks or for bootstrapping new projects

- Using chat functionality to get insights on the codebase or as a thought partner for new features and enhancements

The key challenge for engineering leaders: How do you encourage teams to progress from Level 1 to Level 2?

What's working for us:

- Tracking usage patterns to identify where developers are in their adoption journey

- Organizing working groups where power users share their experiences

- Creating dedicated sessions for knowledge sharing and capability building
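One way to track where developers are in their adoption journey is to map feature usage onto the two levels described above. The sketch below assumes per-developer feature counts are available for a reporting period (how they are collected is left open), and the `ADVANCED_THRESHOLD` value, field names and function names are all hypothetical; a team would tune them to its own data.

```python
from dataclasses import dataclass


@dataclass
class FeatureUsage:
    """Hypothetical per-developer feature counts for one reporting period."""
    developer: str
    tab_completions: int = 0    # Level 1: accepted autocomplete suggestions
    multi_file_edits: int = 0   # Level 2: changes spanning several files
    composer_sessions: int = 0  # Level 2: "junior developer" style sessions
    chat_sessions: int = 0      # Level 2: codebase questions / thought-partner chats


ADVANCED_THRESHOLD = 5  # hypothetical: advanced uses per period to count as Level 2


def proficiency_level(u: FeatureUsage) -> int:
    """Return 0 (not using the tool), 1 (mostly quick wins) or 2 (advanced techniques)."""
    advanced = u.multi_file_edits + u.composer_sessions + u.chat_sessions
    if advanced >= ADVANCED_THRESHOLD:
        return 2
    if u.tab_completions > 0 or advanced > 0:
        return 1
    return 0


def level_breakdown(usages: list[FeatureUsage]) -> dict[int, int]:
    """Count how many developers sit at each level."""
    counts = {0: 0, 1: 0, 2: 0}
    for u in usages:
        counts[proficiency_level(u)] += 1
    return counts


team = [
    FeatureUsage("ana", tab_completions=120, chat_sessions=6),
    FeatureUsage("ben", tab_completions=80, multi_file_edits=1),
    FeatureUsage("cy"),
]
print(level_breakdown(team))
```

Developers who stay at Level 1 across several periods are natural candidates for the working groups and knowledge-sharing sessions listed above.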

But it doesn't stop there, especially with AI tools advancing so quickly. You need to keep an eye out for new tools and developments so your team has the best support and your company isn't left behind.

Right now Cursor is working for us, but we won't be resting on our laurels. This is an ongoing process.

Conclusion

Successfully adopting AI development tools requires a systematic, measured approach rather than rushing into widespread implementation. Through our careful testing and phased rollout of Cursor across the development team, we demonstrated that methodical validation, peer-led demonstration, and ongoing support are crucial elements for gaining genuine buy-in from developers.

The five-step process we've outlined (validating the tool's effectiveness, building a core group of advocates, explaining the benefits through peer demonstration, providing continuous support channels, and tracking adoption metrics) provides a framework that other organizations can follow. This approach not only ensures that the selected tools truly enhance productivity, but also addresses common concerns around security and workflow disruption while building lasting adoption among traditionally sceptical development teams.

You need to think carefully and introduce tools that cause little friction and are easy to migrate to. We were already familiar with VS Code, which is very similar to Cursor, so it wasn't too hard for the team to adopt. If we'd picked a completely different tool, it would have been much harder to make the change and win buy-in from seasoned developers.

Most importantly, by bringing team members into the evaluation and decision-making processes, you ensure the team wants and owns the change.