Behind the Product: How Tech Matters is Bringing Silicon Valley to Social Good

Jim Fruchterman, CEO and Founder at Tech Matters, explains why nonprofits shouldn't chase the latest tech trends—and how to make technology actually work for social impact.

You might expect a former Silicon Valley AI pioneer who founded one of the valley's early machine learning companies to be championing the latest cutting-edge technology. Instead, Jim Fruchterman, CEO and Founder of Tech Matters, spends much of his time talking nonprofits out of building apps they don't need or chasing AI solutions that won't serve them.

It's this practical approach—honed over three decades of bringing technology to the social sector—that’s made him one of the most trusted voices in tech for good. Through Tech Matters' flagship projects Terraso and Aselo, he's proving that sometimes the most impactful solutions aren't the shiniest or newest, but the ones that actually work for the people who need them most.

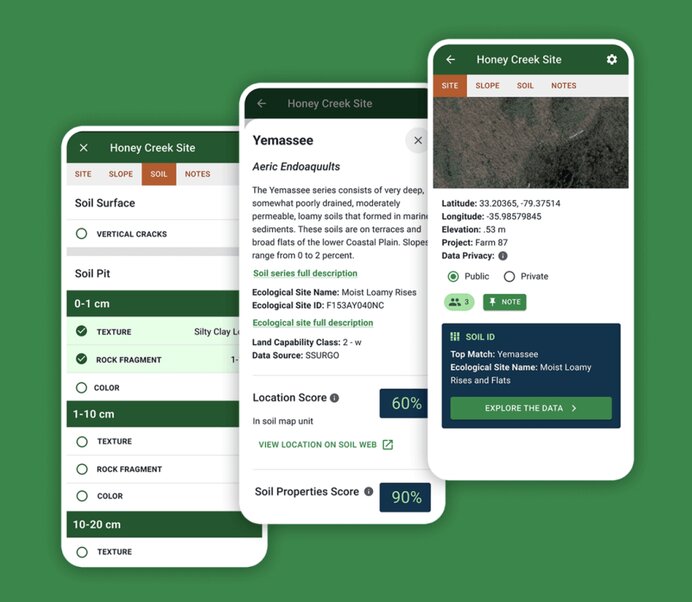

Just recently, we partnered with Terraso through our Green Grants program to help bring offline functionality to their Land Potential Knowledge System App, enabling farmers in remote areas to access crucial soil data without internet connectivity—exactly the kind of practical solution Fruchterman champions.

From providing soil analysis tools to farmers in developing countries to modernizing child helplines across 15 nations, Jim sat down with us to share his insights on how tech companies can make a real difference in the social sector, why 95% of nonprofit apps fail, and his ambitious new initiative to revolutionize how organizations handle data ethically.

If you’re a nonprofit interested in partnering with Whitespectre as part of our Green Grants, please get in touch and let us know how we can help!

We get into:

- How tech companies can get involved with more pro bono work

- How to approach climate tech

- How tech companies can make the biggest real-world impact

- How AI can make a big impact for nonprofits

- The ethics of using AI for social good

- The high failure rate of nonprofit apps

- Getting end user adoption

- Data colonialism and the Better Deal for Data

We started by asking how we could further our existing pro bono work at Whitespectre:

What’s the best way for tech companies like ours, which want to make a social impact but still need to run a business that works, to get more involved in pro bono work?

Jim: Well, the great news is there are now hundreds of non-profit organizations with tech teams. For obvious reasons, right? Just because software is behind everything these days. More and more, people who are ambitious about social change are realizing, “if I'm going to try to change the world for a million people, software and data just might be involved somewhere.”

And yet, these teams are generally tiny. They tend to be generalists. So that means they care a lot about the mission, but if you've got a three-person team it can be difficult to get the best from technology.

So we have two kinds of models that generally work for tech companies to collaborate with nonprofits.

The first model is when a nonprofit would like to talk to experts in their field about something they don't know about. The thinking is “if we talked to someone who really knew a lot about that, they could save us a month of developer time with two or three hours of conversation.”

You want to find someone who can say: “No, you don't want to use that library. That's terrible.” Or “Have you thought about this? You haven’t? Well, maybe you should take a step back and think about that first, because you shouldn't actually build this until you’ve really decided.”

I had an early project, which is one of the first crypto-enabled software packages for human rights defenders, and being able to tap the crypto security field for expert advice was essential because we had just one developer working on this app. They said “using crypto to help human rights defenders is one of the coolest things you could be using crypto for, and we would love to advise you.”

And, of course, in a lot of cases what you need is to be told which technology infrastructure to use because a lot of modern technology is about picking the right infrastructure and your code is the 2% on top. So that's the first model.

The second model is where you have a longer-term engagement with tech companies who can help you with specific things you’re working on. For example, Okta: we're not going to reinvent multi-factor authentication for our sensitive information apps. So we work with Okta because they're part of the Pledge 1% crowd.

A Pledge 1% company can come with a grant, with access to their products and team for a set period, or both.

And of course, we're big fans of Tech To The Rescue, which also organizes these pro bono projects and I know you’ve had great experiences with them.

I think there's a lot of opportunity here that’s consistent with the business imperatives, which is exciting and interesting.

For developers, it's often one of the cooler applications of a specific technology: working on climate change, working on kids' rights, working on helping people grow more food. Usually our tech applications, and I'm speaking for the Tech for Good field, are very visceral and understandable. It’s obvious why this matters.

And your average tech person would love to use their skill set to do something that's socially important, yet probably isn't willing to take a 50 or 70% pay cut!

So this is a great way for them to actually make a big difference and to help the people who’ve made that kind of sacrifice get on a better path, because they've gotten expert advice or they got a project done that they couldn't have done themselves but that they can build on and maintain.

How do you find for-profit companies that are interested in doing that?

Well, I think the first thing is to look at the companies behind your infrastructure when you're deciding on a new product. Those become your first targets. For example, Aselo, our software for national child helplines, which operates in 15 countries, is built on top of Twilio Flex, Amazon Web Services, and Okta. Each of these companies has provided us with grants, free products, and technical assistance.

That's your inner circle—the companies whose products you're already using. Like when we needed help with PagerDuty for our operations monitoring, they not only gave us a grant but also provided staff support.

There are also organized volunteer programs like Tech To The Rescue, which started in Poland. They help connect nonprofits with volunteers based on specific needs—if you need someone who knows React, they'll find that person. Many major companies, especially big banks and insurers, have their own tech volunteer programs.

Word of mouth in the field is crucial. Your peers in other nonprofits can tell you things like, "JP Morgan Chase did a great job on this specific project that matched their team's expertise," or warn you about companies that weren't helpful and wasted a lot of time. It's about finding the right fit.

So it's never too early for nonprofits to reach out to tech companies and start collaboration in order to avoid taking the wrong path...

Exactly. In the tech industry, you learn what works and doesn't work through various channels—your own experiences with failure, seeing clients struggle, or reading industry publications about why 95% of startups in a particular space failed. You add all of that to your mental model.

The nonprofit sector doesn't have that same knowledge base. Tech people can help bridge this gap, but we often run into tech boosterism—overly optimistic promotion that drowns out practical voices. We're seeing this with AI right now. Some AI companies, driven by financial interests, are drastically overstating their current capabilities. They're betting on future developments and working to reduce costs and energy usage.

Will it work? I don't know. I'm glad for-profit companies are spending billions figuring it out, but I don't want nonprofits wasting resources trying to be at that leading edge when even larger companies are struggling. Let's wait for them to figure it out, and then we'll implement the proven solutions three to five years later.

There’s obviously a disparity in how much bandwidth a smaller business can donate versus a much larger enterprise. How can we do more to encourage others—our competitors and our peers in the field—to do the same?

I tried a start-up 15 years ago called Code Alliance where we’d take tech company volunteers and have them work on open source projects, and it didn't work. It was hard. Google obviously has a big volunteerism program, but even though they're big, it was often a challenge to get people to actually show up for volunteerism gigs. So it's often hard to do.

The thing I like about Tech To The Rescue is we're at the point where more and more nonprofits have a tech team so that the volunteerism actually matters over time. And their target for volunteerism is actually companies of your size.

If it turns out your team is mega-crunched and they have no bandwidth, you're not going to do a volunteerism project in the next quarter because you've got some deadlines, right? But if you look and say “we're a little soft in the summer, we've got a couple of people at loose ends, we'd like them to use their skills and get pumped up, and we're willing to continue paying them because we have a big contract coming up in September,” then it’s actually a really good business case: You maintain your important human assets by giving them something exciting to do rather than having them twiddle their thumbs. And they get to feel great about it.

We’ve been working with Tech Matters on your Terraso app, as part of our pro bono Green Grants initiative. But speaking generally, how should a company like ours look to get involved when it comes to climate tech?

The climate space is really difficult. Climate finance and hedge funds have tech people, or they can hire tech people directly or get an agency to do some work for them. There are plenty of opportunities to do climate work for people who have money because our capitalist system works pretty well for that. There's some interesting stuff going on in the supply chain, and by and large it’s fundable in a capitalist way, with customers.

But we're interested in the harder part—the other 90%. Your local farmer in rural Asia, Africa, or Latin America isn't an attractive target for profit. That's where our model with Terraso and our suite of tools comes in. We're focused on the person on the front lines, or maybe the person managing the co-op, or the local NGO, or the land use planner in that county, province, or district.

If those are your targets, by and large, they’re not investible but they have a lot of the same needs as the equivalent person in a wealthy country. In rich countries, people use tools like Esri’s ArcGIS for mapping. But you can’t use that in most of the world. A lot of companies would use Salesforce for business management but you can't use that in rural Zambia—I’m sorry but it's just a bad idea. So that's the gap we're excited about filling as a nonprofit.

I'm not interested in competing with for-profit companies in viable markets—they're generally better at that than nonprofits. But when companies can't make enough money, or when local companies lack sophistication, that's where we come in. Even though we're a charity, most of the work on the ground is about people making money. Farmers want to increase their profits despite less rain and more heat on average. We're trying to give them the missing tools and infrastructure.

We're not alone—there are dozens of nonprofits like us tackling different parts of this problem. Many of us are open source, which encourages collaboration. For example, in Terraso, we needed data collection capabilities. The standard tech for Android data collection is Open Data Kit (ODK), and over the last 15 years, great tools have been built on top of that. KoboToolbox is one of the best of these—it enables you to collect data, pictures, maps, and more, all offline, which is crucial in many parts of the world. So we just built it in.

Another example is Precision Development, a terrific nonprofit—I think they're UK-based. They provide advice to five million farmers in the developing world. An Iowa farmer can buy this kind of service, but a Kenyan farmer probably can't afford it. So having an NGO provide this service, in partnership with the National Ministry of Agriculture, gives farmers the practical information they need.

From a volunteerism standpoint, these organizations often have their own small tech teams. The challenge is creating lasting impact. If you do tech work for a non-technical nonprofit, you might come back in a year and find they're not using it anymore. But if they have even one tech person, you've got someone who can maintain and build upon what you created.

There's another pattern we're seeing: Universities and scientists are incredibly enthusiastic about climate solutions, building exciting models for various approaches. But university professors and their teams aren't usually the best at developing practical, usable software. The science might be right, but how do you get that to a million farmers? That requires real product work, not just scientific work.

I see a big gap between proven research and actual implementation—getting it to the farmer or local policymaker who needs it. I think this gap will increasingly be filled by small tech-for-good nonprofits partnering with industry while getting scientific guidance. Not every professor wants to hear this, but just throwing technology at people without doing the product marketing and user experience work doesn't stick.

Are there areas where people could be making an impact on the climate crisis that may seem counter-intuitive—are there any unglamorous areas you'd identify that would yield a bigger impact if companies got into those spaces?

The dirty secret of technology is that it's usually just plumbing: Basic tools to help you get whatever you want to get done. Many of the AI tools people use today work without users even knowing they're using AI. It's just something that helps them get from point A to point B.

Making technology invisible is actually the secret to adoption. The shortest distance between me and my goal might be using technology, but I shouldn't even have to think about it—it should just work and do what I need. The more energy you put into highlighting how cool or shiny it is, or how it connects to big controversial issues, the more you miss the point.

Take climate change: As a former scientist, I don't think there's much debate about humans causing it, but I don't have to sell a farmer on that concept. I can just say, "Hey, if you change your farming method from this to that, someone will give you $10 per acre per quarter." They'll take that money without needing to buy into the larger debate.

Some of this stuff is really boring, but that's actually how people use technology in real life. They use it to track their finances, plan their next steps, and communicate with others. Look at our two big programs: Terraso for climate change and Aselo for national child helplines. What's Aselo doing? It's taking telephone-oriented helplines and adding text channels. In Silicon Valley, they'd say "That's so 10 years ago!" But most helplines in the world are still taking telephone calls, while teenagers aren't making many calls anymore.

Because of this trailing edge phenomenon, we don't have to be at the leading edge or bleeding edge to be useful. We can take things that have been proven to work in the business sector and figure out how to apply these now-boring, standard tech infrastructure solutions to new contexts. That tech infrastructure and those benefits haven't reached these people yet. If we can just make their lives that much easier, we're doing what the tech industry does for a group of people who aren't currently financially exciting.

If we do our job right, in five or ten years, these people are going to be middle class and part of a viable market. I think one of the big dreams of the nonprofit sector is graduating people out of poverty so they become functional members of the market economy and can buy that smartphone. Well, the smartphone is probably the one thing that has reached almost everybody, or at least the majority, but other tech solutions haven't.

About six months or so ago, you did a talk on AI for social good. The AI space is progressing really quickly. You’ve spoken in the past about nonprofits tending to use older generations of technology. What's your thinking about AI and how it might level the playing field?

I actually started one of Silicon Valley's early for-profit AI companies a long time ago. We were working in character recognition, which was then the leading edge and was called machine learning. Our particular breakthrough was being the first in the OCR field to use a database of millions of character examples to train the AI, rather than trying to design the algorithm out of our own heads.

I've spent over 30 years bringing AI to the nonprofit sector, and my number one piece of advice to nonprofits is: Don't build an AI product. It's hard and expensive. To create a product, you need a fair amount of data, which a lot of nonprofits don't have. Over five years, you're going to be spending seven figures, whatever your major currency is. That's not what a million dollar nonprofit should be doing.

Even though I'm a complete enthusiast for AI—it's been my career, especially bringing AI to social good—I spend a lot of my time talking people out of AI because right now the hype cycle is so powerful that people think it's this magic wand. They think they can lay off half their staff and everything will be great. And I’m like “no, don't do that!”

Last month I published something called the “Nonprofit AI Treasure Map.” It's a single chart about how to find treasure with AI. The short answer is, if there's a product: Try it. If it helps you far more than it hurts you: Use it. If not, wait a year and check again, because the dynamics could have changed. That's what 99% of nonprofits should be doing.

Now, there are maybe a couple hundred nonprofits that have tech teams, know a lot about data, have a lot of data, and they're doing really exciting stuff with AI. Some are pioneering within their organizations. Look at Khan Academy with the Khanmigo AI tutor. Will it work? I don’t know but it’s a bold experiment worth trying. Could a kid who could never pay for a tutor get an AI tutor that actually helps them significantly? Maybe, let's try that.

Digital Green in India is doing agricultural advisory for farmers really well. MomConnect in South Africa focuses on maternal health advice, using AI to answer 20-60% of their questions with canned answers because, frankly, many questions from pregnant women are similar, and you can provide vetted, correct answers.

These are all great applications. They're all about making the humans in the system more effective and powerful, reserving human interaction for the more challenging needs rather than routine ones. AI is great at eliminating drudgery. But if you're going to invest a million dollars or more, you need to be eliminating enough drudgery to justify that investment.

Nonprofits have to justify their technology investments either through cost savings or massive expansion in the impact of their services—effectively lowering the cost of delivering a unit of social good. That's what technology is for. How can we be 30% more efficient in two years with this technology? How can we take this one task and make it cost a quarter of what it costs now?

I often joke that your nonprofit should not be writing software any more than your corner restaurant should be writing software. Wait until there's a product that actually solves your problem—one that solves the problem for 5,000 or 50,000 other organizations like you. That product is likely to be good, maintained, and improved over time, and affordable!

Yeah, and it's so interesting, right? It's always build versus buy for any business, and quality off-the-shelf software that meets the goals of whatever the organization is trying to do is so much more cost-effective than it was 15 years ago.

And then if there's one place where you go “oh my god, we have to do something much, much better than the status quo products,” that's just one place rather than trying to do 15 things simultaneously.

You can buy or rent 13 or 14 of those, and if you really have something that's important, then all you have to do is focus on that one missing piece your effort turns on, as opposed to basic things like security, servers, and managing loads. People solve those problems for you, and it's affordable.

A common criticism of using AI—or crypto, which you mentioned earlier—for moral good is that those technologies themselves have a very real and negative environmental impact. It would be fascinating to hear your outlook on that whole discussion.

My view is that we're usually using technology for harmful purposes before we use it for good ones. The ethical dilemma is often "why aren't we using it for good?" Usually, the reason is simple: I can't make enough money doing that, but I can make a lot selling technology to the oil and gas industry or whatever. People can use generative AI to create deep fake porn, for instance. So my challenge isn't defending technology: It's acknowledging that while technology is being used for something harmful here, it could be used for something beneficial there.

That's actually why I got into AI in the first place. When I was a grad student, we were learning how to make smart missiles with camera systems in the nose that could recognize targets, and most of my peers went to work for the military-industrial complex. I went back to my dorm wondering if there was a more socially beneficial application for pattern recognition than smart bombs, and thought, "Hey, you could use this to help blind people read."

That's where my career started. First building the technology as a venture-backed startup, raising $25 million in Silicon Valley. And when my investors vetoed the product for blind people, I said, "Well, I'm going to do it anyway," and made reading machines as a nonprofit. That worked. So I'm always looking at the leading edge and trying to figure out how to adapt it for good.

Now, about the environmental impact of AI: We’re running into an issue where our arms race in the AI field is causing us to keep burning more coal. We can't get enough energy fast enough. However, because the social good field is lagging behind, we're actually in a different position. People ask us how we can store so much data for free. Well, poor people and small NGOs don’t have very much data. I'm not trying to be a Meta or Amazon or Google or Apple.

In many ways, the most energy-intensive uses aren't going to be important in the social good sector. We can run cheap, thin models that are very computationally efficient. We're not going to run the most expensive model for a thousand iterations to get the right answer.

I can give you two examples. In Terraso, our most advanced AI application is soil identification. By making maybe 15 or 20 measurements without a lab—just dig, take four measurements, go deeper, take four more, see how much rock there is—we have a little AI algorithm that tells you the soil type. If we can tell you the soil type, you know what will grow well on that hillside. That's really important, but it runs on a smartphone! I'm not worried about the energy that little AI algorithm uses.

For Aselo, one of the best AI applications is analyzing chats. That's kind of the slam-dunk thing. The bad idea would be to fire the humans and put a chatbot on the line—no empathy, no understanding, might accidentally cause someone to commit suicide. We've had a couple of news stories about that in the last few months. So don't do that.

Instead, we use AI to understand what conversations are about: Is it about bullying, or assault, or divorce? It's pretty straightforward stuff as far as AI use cases go, and not computationally intensive. An increasing number of these helplines in different countries have enough data to run models without using LLMs at all. If you don't have much data, you might need an LLM initially, but you could probably build a simple model that runs inexpensively once you figure it out. Use the expensive LLM for development, but when you go to scale, use something cheaper.
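To make the "simple model" idea concrete, here is a minimal sketch in Python of a small naive Bayes text classifier that sorts a message into a topic. It is only an illustration of how lightweight such a model can be, not a description of how Aselo actually works. The training snippets, the topic labels, and the function names `tokenize`, `train`, and `classify` are all hypothetical and invented for this example.

```python
import math
from collections import Counter, defaultdict

# Hypothetical, made-up training snippets. A real helpline would use its own
# vetted, anonymized, labeled conversation data, and far more of it.
TRAINING = [
    ("kids at school keep teasing me and calling me names", "bullying"),
    ("someone in my class pushes me and laughs at me every day", "bullying"),
    ("they post mean things about me online", "bullying"),
    ("my parents are splitting up and i have to choose where to live", "divorce"),
    ("mum and dad fight about custody all the time", "divorce"),
    ("dad moved out and my parents are getting divorced", "divorce"),
]


def tokenize(text):
    """Lowercase the text and split it on whitespace."""
    return text.lower().split()


def train(examples):
    """Count words per label, examples per label, and collect the vocabulary."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    vocab = set()
    for text, label in examples:
        label_counts[label] += 1
        for word in tokenize(text):
            word_counts[label][word] += 1
            vocab.add(word)
    return word_counts, label_counts, vocab


def classify(text, model):
    """Return the label with the highest naive Bayes log score."""
    word_counts, label_counts, vocab = model
    total_examples = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, count in label_counts.items():
        # Log prior: how common this label is in the training data.
        score = math.log(count / total_examples)
        # Laplace (add-one) smoothing so unseen words never give log(0).
        denominator = sum(word_counts[label].values()) + len(vocab)
        for word in tokenize(text):
            if word in vocab:  # ignore words never seen in training
                score += math.log((word_counts[label][word] + 1) / denominator)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


model = train(TRAINING)
print(classify("my parents are getting divorced", model))
print(classify("kids keep calling me names", model))
```

A model like this uses only word counts and a few logarithms, so it could run on very modest hardware. The trade-off is that it needs enough labeled examples to be reliable, which fits the point above about helplines that already have data.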

So while we do have a climate issue around big AI, I don't think nonprofit use cases are going to be pushing the envelope in terms of being a significant part of that problem.

You’ve said elsewhere that 95% of the apps you’ve seen created for nonprofits fail. Why do you think that is?

Being in Silicon Valley, I saw the app craze about 15 to 18 years ago. Investors did what they always do: They poured lots of money into lots of companies, and then there were winners and losers. People figured out what apps were good for, what they weren't good for, and which apps worked. Some markets got locked up by incumbents, making it very hard to displace them.

But the nonprofit sector, to pick apps as an example, doesn't get that memo. They're not doing it for business reasons, and they're often advised by people who think apps are still a thing. So I usually find myself talking people out of building an app because they've been told they need one, not because they actually have a use case for one.

Here's a classic example: With Aselo, our helpline software, the founder of the movement—who isn't technical—got a strategy plan from a management consulting company for the entire child helpline movement in 140 countries. Half of it was about an app. The founder came to me and said, "Jim, you're a nerd I trust—what do you think?" I told them I didn't think they had an app use case.

People call emergency numbers like 911 in the US because it's well-socialized and they need help immediately. Your use case isn't someone experiencing a dangerous situation thinking, "Let me pull out my smartphone, go to the Google Play Store, download an app, set it up, and then report an assault." That doesn't happen.

Yet nonprofits keep getting bad tech advice. Maybe it's from a fresh MBA grad given an assignment by their boss, pulling down a 10-year-old playbook about apps being great. I joke that when I talk to nonprofit leaders about their tech idea, what I'm doing is usually anti-consulting.

In my book, there's a chapter called "The Five Bad Ideas That Everybody Tries": An app that no one will download; a gigantic database in the sky that people won't use because they have search engines; blockchain or whatever the latest trend is. Eight years after the initial blockchain hype, I have yet to find a single nonprofit using blockchain effectively for social change at scale. Blockchain is like 0 for 1,000. But with AI, every wave brings something cool once we figure out what it's good for.

I think it comes down to this: If you're financially motivated, investors or your company's investment committee won't invest in a product that doesn't make sense or that everyone already understands doesn't work. But nonprofits don't necessarily have that intelligence.

That's part of my job—though I'm not really a consultant, I'm trying to build a couple of products here! But I spend a lot of my time advising people to not start with the technology, but to start with their problem and identify where it's really painful.

If I were doing a startup, I'd go to Y Combinator and work on product-market fit. But if you're doing technology in the social good sector, what you should be working on is product-user fit. If you haven’t got it, nothing else matters!

Once you’ve completed one of these excellent tech initiatives, how do you get the adoption from end users?

That's the number one problem—distribution, implementation, adoption. It's not the tech. The tech industry knows we can build anything, but getting people to use it is another issue entirely.

There are several models we use in the field. First, while you're building the tech, you try to do more human-centered design to find out near the beginning that your product is completely unusable, rather than after you've spent all your budget. Let's assume you've built a product that's usable by your early adopters, and now you're expanding to people who aren't early adopters. You get a rude awakening that they're very used to using the telephone, or collecting data on paper or in spreadsheets, and now you're trying to get them to adapt to a SaaS platform or use a smartphone to collect data.

Like many developers, we don't scale. If we want to have 50,000 users, we're never going to be able to do that directly with a team of 15. So early on, we overinvest in early adopters, providing uneconomic levels of support to get insights, assuming that each early adopter represents hundreds or thousands of customers in two years. We need to understand their problems and make sure we're solving them.

For distribution, we're always looking for existing channels we can use. In conservation work, it might be big orgs like UNDP, because none of the big nonprofits are particularly good at software development. We're not writing software for big NGOs to use themselves—we're helping their farmers. So we train the trainers, which is a very standard approach. In tech terms, we don't want to be front-level support for our customers—we want to be second or third level support as a product maker—because many of our partners have infrastructure for training and support that we can leverage without recreating it.

Depending on your product's maturity level, you end up spending more and more time trying to make adoption easier and smoother. Even though we know that rural people in developing countries aren't as technically empowered as people in rich country cities, we're often really reminded of this when we deploy a product and realize, "Oh, we thought they would know how to do this thing on their device, and they don't." So we have to add an even more basic level of training than we assumed.

Take our work with national child helplines around the world. During the peak of the pandemic, we were doing everything virtually, and it just wasn't working well. We had overestimated the tech skill level of the average counselor in a child helpline in Africa. We ended up hiring software engineers in South Africa and sending them to Zambia, our first country, so we could actually see what was happening.

There are also cultural norms that make it very hard for people to tell you the blunt truth about your product. In South Asia, for example, they're often too polite and will get more and more frustrated without telling you. You have to go out of your way to solicit feedback because if you wait for the squeaky wheel, people may be exploding in frustration before you've gotten wind that they're unhappy with your product. So we never launch in a country without visiting and spending time with the frontline staff and planning for change management.

For individuals, you can't afford that approach. Instead, you might go to a major conference in that area and talk to as many users as possible in one go. We can afford to do that even if we can't talk to 300 farmers individually—if 300 of them show up at an event, we can have 25 meaningful conversations.

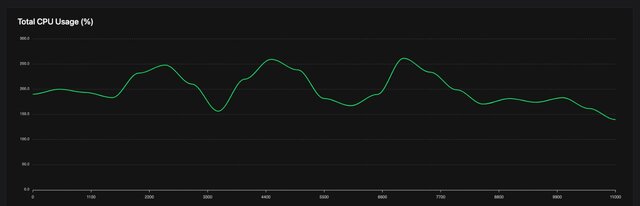

We also use tools like Fullstory, which blots out all the actual data entry fields but lets you watch what your users are doing. If they keep clicking on something that's not clickable, you've got a product design problem. There are ways to gather insights without violating ethics or personal information. I'm always wondering how we can automate seeing what's happening with our users to understand what's working without violating privacy. It's not always possible, but when it is, it's usually smart. Of course, if you're a big tech platform, this is second nature—you're watching everything.

We know you have a book coming out. What else are you most excited about in the next six to 12 months?

We're planning to launch a data governance initiative called the Better Deal for Data. Nonprofits and most businesses want to do the right thing with the data of the people they serve, and that doesn't involve selling it to Meta or similar companies.

The Better Deal for Data is based on eight simple principles, modeled after the open source initiative, but for private data. The core concepts are straightforward: It's your data, not ours. We'll delete it, correct it, or transfer it somewhere if you want us to. If we hold it, we'll secure and encrypt it. We won't sell it. We'll use it for your good and the good of the planet. If we do research with it, we'll give you open access.

I'm really excited about this because nonprofits are looking for something like this. We've spent about two years looking for a lightweight approach to private data. Yes, we have regulations like GDPR in Europe, which are great, but this goes beyond that. We soft-launched it at Oxford this spring, and now we're gathering feedback. We're seeing a lot of excitement because data is important, and existing data sets are not very diverse.

We have to do something different, not only on the tech infrastructure, which is my traditional area of work, but also on the legal and social infrastructure—what are the norms, what's an alternative to surveillance capitalism? I'm not trying to overthrow surveillance capitalism, I just think we need an alternative for mission-based organizations and companies that would rather be trusted by their users than selling them out to third parties.

And is the plan to make that an international initiative?

Definitely. One of our team members was in Kenya last week, gathering use cases from about 25 people on the ground, ranging from farmers to local leaders. Our work on data governance was actually inspired by a conversation I had five years ago in Tanzania, where someone explained data colonialism to me. As a guy from Silicon Valley, I was dismayed that I'd never heard the term. “You should look this up, Jim!”

When I looked it up, one of the scary things was that, while we're used to for-profit companies and governments being somewhat colonial, the nonprofit sector and academia are also very colonial in their approach to data. Part of the Better Deal for Data is not just about avoiding colonial practices, but ensuring data gets used for the benefit of the people it's collected from. I've seen people take data about poor people and sell it to the fintech industry so they can make them poorer. I love data, but I don’t think that’s the best use of it. That's not the kind of use I want to be promoting.

If you’re a nonprofit interested in partnering with Whitespectre as part of our Green Grants, please get in touch—and let us know how we can help!