You launched an AI assistant. Do you really know how it's performing?

When conversational AI affects retention and conversion, looking at individual responses isn’t enough. We share how we evaluate AI assistants as systems, so teams can understand the full user experience, see where it breaks down, and fix what matters.

Your company's new AI assistant feature launches. The business case was clear, the demo responses looked good, and users are excited.

Then Customer Support starts flagging complaints: 'The bot didn't understand,' 'It ignored part of my question,' 'The answer was wrong.'

Your team pulls the related session transcripts. They do look poor: users repeating themselves, answers that don't quite make sense, visible frustration in the messages.

What's not visible is why.

The transcript shows the conversation went off track. It doesn't show that:

- the retrieval layer timed out

- the tool call returned yesterday's data

- the 'escalate to CS' logic should have fired two turns earlier

These failures aren't just isolated bad responses. They represent poor session experiences and breakdowns between system components.

The problem? Too many teams building AI assistants stop evaluating at the level of the individual response. But in our experience, the issues that erode user trust rarely live in a single turn.

In this article, we lay out our evaluation framework, which works at three levels: individual turns, full sessions, and cohort-level trends.

We'll cover how to define what "good" looks like for your specific context, how to build observability that surfaces the patterns you'd otherwise miss, how to trace problems across the system, and how to link feature performance to business outcomes.

Our approach:

- Evaluation defines what good looks like and measures against it.

- Observability captures what actually happened—across the model, retrieval, and tooling.

- Traceability connects failures to specific causes so you can fix them.

Without all three, you might be able to detect issues, but you can't explain or fix them.

We experienced this firsthand with our WhatLingo app. We had a tricky WhatsApp linking flow that our analytics showed was failing: users were dropping off at a few specific steps.

The data told us what was happening, but not why. We needed to watch users struggle through the flow and hear their frustrations in real time. But doing user outreach, scheduling interviews, coordinating calendars, and setting up screen sharing for what should have been a 10-minute conversation? There had to be a better way.

So we decided to test voice AI research to see whether it could address some of the core blockers that make teams put off user research:

- User schedule and geographic limitations: Users can engage when it's convenient for them, not only when interview slots are available.

- Calendar bottlenecks: One researcher can only conduct so many good interviews a day.

- Analysis delays: We can analyze each interview as it comes in and then use AI to find patterns across them, rather than doing it all in one block as with traditional interviews.

- Cost barriers: Each traditional interview costs time, money, and coordination overhead.

We believe that automating as much of the ‘admin’ side of user research as possible will let us focus on the parts that need a human: reviewing the transcripts and creating the analysis.

The AI dynamic can change how people respond. Some users might actually be more honest with an AI researcher: there are no feelings to worry about and no judgment to navigate. It's not without its flaws; some people feel uncomfortable being recorded, while others find AI interactions strange or impersonal. This isn't a universal solution; it's an additional tool that works for some users and some research contexts.

Our voice AI researcher, Emily, isn't perfect. We’ve noticed she sometimes over-clarifies responses and can feel repetitive. There were technical hiccups and moments that felt distinctly artificial.

But here's what matters: Emily is available whenever a user wants to give us feedback. Even an imperfect AI conversation can generate insights that written forms never would have captured (because users probably wouldn’t fill them out).

This reflects a fundamental truth about AI development: it's probabilistic, not deterministic. Emily gets better through real conversations with real users, and we all learn from each interaction.

The real breakthrough is just as operational as it is technical. Voice AI research allows product teams to automate the logistics of user interviews while preserving the insights that only conversation can provide.

Imagine conducting user interviews at scale, gathering insights without the usual logistical constraints. The compound effect on product decisions becomes enormous when user insights flow as freely as prototypes. You can use this alongside your team's strategic knowledge, data points, and internal research to make better decisions.

The companies winning in today's market aren't those with perfect research processes, or those waiting for AI to do everything. They're the ones with consistent research habits. Ideally, we could remove enough friction that gathering user insights becomes as routine as checking analytics.

It's about making "some research" possible when normally there would be no research at all.

The question isn't whether AI can conduct research as well as humans. The question is whether you can afford to keep making decisions without doing user research.

1. Evaluate at three levels: turn, session, cohort

Most teams evaluate at a single level. That catches some issues but misses many others.

Turn-level: individual exchanges

A single back-and-forth between the user and the interface. This level is ideal for what we think of as continuous design—the ongoing work of refining the assistant before and after launch as models, tools, and data sources evolve.

We evaluate:

- Prompt and instruction tuning

- Retrieval accuracy and failure detection

- Tool-call success, latency, and partial failures

- Response quality, tone, refusal style, and formatting

- Model routing, fallback behavior, and cost–latency tradeoffs

This is where teams often encounter surprises when underlying models change. On one project, a model upgrade produced responses that were noticeably “friendlier.” That sounds positive, until we realized our client’s assistant now felt less authoritative in a context where trust mattered more than warmth. We had to adjust the underlying prompts to get back to the right balance.

Importantly, turn-level evaluation must extend beyond text quality.

With another client, where we were brought in to improve the experience, we found that the model’s responses were consistently accurate and empathetic. However, 18% of sessions “failed” because a downstream tool timed out. Users experienced this as the assistant “ignoring” part of their request.
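To make "beyond text quality" concrete, here is a minimal Python sketch, assuming each turn is logged with a text-quality score (from a reviewer or judge) plus the tool calls it made. The `Turn` and `ToolCall` records, the status strings, the 0 to 1 score scale, and the latency budget are all hypothetical choices for illustration. The sketch flags sessions where a turn read well but a tool call failed or ran slow, which is the pattern a text-only review misses.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ToolCall:
    name: str
    status: str  # hypothetical values: "ok", "timeout", "error"
    latency_ms: float


@dataclass(frozen=True)
class Turn:
    session_id: str
    text_score: float  # hypothetical 0-1 quality score for the response text alone
    tool_calls: tuple[ToolCall, ...] = ()


def turn_issues(turn: Turn, latency_budget_ms: float = 3000.0) -> list[str]:
    """List tool-level problems in a turn, regardless of how good the text was."""
    issues = []
    for call in turn.tool_calls:
        if call.status != "ok":
            issues.append(f"{call.name}: {call.status}")
        elif call.latency_ms > latency_budget_ms:
            issues.append(f"{call.name}: slow ({call.latency_ms:.0f} ms)")
    return issues


def silent_failure_rate(turns: list[Turn], good_text: float = 0.8) -> float:
    """Share of sessions containing a well-scored turn that still had a tool problem."""
    sessions = {t.session_id for t in turns}
    flagged = {t.session_id for t in turns if t.text_score >= good_text and turn_issues(t)}
    return len(flagged) / len(sessions) if sessions else 0.0


turns = [
    Turn("s1", 0.90, (ToolCall("order_lookup", "timeout", 5000.0),)),
    Turn("s1", 0.85),
    Turn("s2", 0.95, (ToolCall("order_lookup", "ok", 400.0),)),
    Turn("s3", 0.60, (ToolCall("faq_search", "ok", 3500.0),)),
]
print(turn_issues(turns[0]))       # ['order_lookup: timeout']
print(silent_failure_rate(turns))  # 0.3333333333333333 (only s1)
```

Session `s3` is not counted as a silent failure: its text already scored poorly, so ordinary response review would catch it.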

Session-level: the user journey

A session captures the full interaction as a user tries to accomplish a goal across multiple turns. This is where correct answers either add up to a useful experience—or don't.

Turn-level evaluation is about accuracy and tone. Session-level evaluation is about usefulness.

Session-level evaluation reveals:

- Whether the user's intent was actually resolved

- Where users loop, stall, or abandon

- Whether clarifying questions were asked at the right time

- When escalation should have happened—and didn't

- Session length, retries, and token cost as indicators of friction

One hard-learned lesson: session success must be defined before launch. When it isn't, teams unconsciously optimize for metrics that make releases look good rather than experiences that actually work. Case in point: longer sessions don't necessarily mean users are more engaged or more likely to hold onto that AI plus subscription. They could instead mean users are confused and struggling to get answers. Similarly, higher support deflection doesn't guarantee that customer problems are getting resolved. These dynamics are familiar to anyone who has watched OKRs drift away from user reality.
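One way to force that definition up front is to write session success as an explicit rule before launch. The sketch below is hypothetical: the `Session` fields, the thresholds in `SuccessCriteria`, and how you derive "intent resolved" or "escalation was due" (reviewer labels, downstream events, or a judge) are assumptions to replace with your own. It returns the reasons a session failed rather than a bare boolean, so failures stay traceable, and it treats a long session as friction rather than engagement.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Session:
    intent_resolved: bool
    retries: int  # times the user repeated or rephrased a request
    turns: int
    escalation_due_turn: int | None = None  # turn by which a human handoff was needed, if ever
    escalated_at_turn: int | None = None    # turn at which the assistant actually escalated


@dataclass(frozen=True)
class SuccessCriteria:
    # Hypothetical thresholds; agree on these with stakeholders before launch.
    max_retries: int = 2
    max_turns: int = 12
    escalation_grace_turns: int = 0


def session_failures(s: Session, c: SuccessCriteria = SuccessCriteria()) -> list[str]:
    """Return the reasons a session failed; an empty list means success."""
    reasons = []
    if s.escalation_due_turn is not None:
        # When a handoff was needed, success means it fired, and on time.
        if s.escalated_at_turn is None:
            reasons.append("escalation never fired")
        elif s.escalated_at_turn > s.escalation_due_turn + c.escalation_grace_turns:
            reasons.append("escalation fired late")
    elif not s.intent_resolved:
        reasons.append("intent not resolved")
    if s.retries > c.max_retries:
        reasons.append("too many retries")
    if s.turns > c.max_turns:
        reasons.append("session too long")  # length counts as friction, not engagement
    return reasons


print(session_failures(Session(intent_resolved=True, retries=0, turns=4)))  # []
print(session_failures(Session(False, retries=3, turns=9, escalation_due_turn=4, escalated_at_turn=6)))
# ['escalation fired late', 'too many retries']
```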

Cohort-level: patterns over time

Cohort-level evaluation aggregates behavior across user segments, time windows, releases, and configurations.

It answers questions like:

- Who is the AI interface actually serving—and for what tasks?

- Did quality improve or regress after a release or model change?

- Are failures isolated or systemic?

- Are safety or policy issues concentrated in specific flows?

- Do users who engage with the AI behave differently in terms of retention or support?

This is where evaluation answers strategic questions. Cohort analysis shows where conversational interfaces create real value, or where they quietly introduce serious liability.
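A cohort-level comparison can start as a simple group-by. This sketch assumes each session already carries a success label (for example, from your session success definition), a release tag, and a user segment; the field names and the 0.05 drop threshold are hypothetical. It flags segments whose success rate fell between two releases: if only one segment shows up, the regression looks isolated; if every segment does, it looks systemic. On real traffic you would also want a minimum sample size or a significance test before acting on a drop.

```python
from __future__ import annotations

from collections import defaultdict


def success_rates(sessions: list[dict], *keys: str) -> dict[tuple, float]:
    """Session success rate for each combination of the given keys."""
    totals: dict[tuple, int] = defaultdict(int)
    wins: dict[tuple, int] = defaultdict(int)
    for s in sessions:
        k = tuple(s[key] for key in keys)
        totals[k] += 1
        wins[k] += int(s["success"])
    return {k: wins[k] / totals[k] for k in totals}


def segment_regressions(sessions: list[dict], baseline: str, candidate: str,
                        min_drop: float = 0.05) -> dict[str, float]:
    """Segments whose success rate dropped by at least min_drop between two releases."""
    rates = success_rates(sessions, "release", "segment")
    flagged = {}
    for seg in sorted({seg for (_, seg) in rates}):
        before, after = rates.get((baseline, seg)), rates.get((candidate, seg))
        if before is not None and after is not None and before - after >= min_drop:
            flagged[seg] = round(before - after, 3)
    return flagged


sessions = [
    {"release": "v1", "segment": "new_users", "success": True},
    {"release": "v1", "segment": "new_users", "success": True},
    {"release": "v1", "segment": "power_users", "success": True},
    {"release": "v1", "segment": "power_users", "success": False},
    {"release": "v2", "segment": "new_users", "success": True},
    {"release": "v2", "segment": "new_users", "success": False},
    {"release": "v2", "segment": "power_users", "success": True},
    {"release": "v2", "segment": "power_users", "success": False},
]
print(segment_regressions(sessions, "v1", "v2"))  # {'new_users': 0.5}
```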

Why this matters for traceability:

- Turn-level shows what happened

- Session-level shows how it affected the journey

- Cohort-level shows how widespread the issue is

Together, they form the backbone of observability.

A common reason these signals go unaddressed is ownership fragmentation. Product teams own UX, ML teams own models, platform teams own tooling, and support teams own escalations. Evaluation spans all of these—but often belongs to none. When evaluation is cross-functional without being explicitly accountable, observability becomes descriptive rather than corrective.

2. Measure quality with CORE + CUSTOM dimensions

To make evaluation reusable and explainable, we separate quality into two layers.

CORE dimensions: baseline trust

These apply across products and industries:

- Clarity – Simple, structured, easy to follow

- Relevance – Directly addresses the user's question

- Tone – Professional, trust-building, brand-aligned

- Accuracy – Factually correct, aligned with official sources

- Guidance and actionability – User can act immediately on the answer

- Conversation flow – Natural, well-paced, adaptive

- Boundary adherence and safety – On scope, handles off-topic gracefully

- Responsiveness and reliability – Fast, consistent, available

These establish baseline trust.

CUSTOM dimensions: product-specific expectations

Every conversational interface has expectations that go beyond baseline quality. Custom dimensions capture what would constitute a true product failure—even if everything looks acceptable on paper.

For a coaching assistant we built, custom dimensions included:

- Methodology adherence – Does it faithfully follow the client's official training materials?

- Appropriate escalation – Does it progress step-by-step and flag when human support is needed?

- Encouragement and personal touch – Does it provide the motivational support central to the experience?

This CORE + CUSTOM split keeps evaluation portable without diluting rigor.
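In code, the split can be as simple as a shared core rubric that every product reuses, plus a per-product custom layer. The dimension keys below are our own hypothetical labels for the dimensions listed above. The guard that stops a custom dimension from silently redefining a core one is an illustrative design choice, meant to keep core scores comparable across products.

```python
from __future__ import annotations

# Shared across products (hypothetical keys for the CORE dimensions above).
CORE_DIMENSIONS: dict[str, str] = {
    "clarity": "Simple, structured, easy to follow",
    "relevance": "Directly addresses the user's question",
    "tone": "Professional, trust-building, brand-aligned",
    "accuracy": "Factually correct, aligned with official sources",
    "actionability": "User can act immediately on the answer",
    "conversation_flow": "Natural, well-paced, adaptive",
    "boundaries_safety": "On scope, handles off-topic gracefully",
    "reliability": "Fast, consistent, available",
}


def build_rubric(custom: dict[str, str]) -> dict[str, dict[str, str]]:
    """Combine the shared core rubric with one product's custom dimensions."""
    clash = CORE_DIMENSIONS.keys() & custom.keys()
    if clash:
        raise ValueError(f"custom dimensions redefine core ones: {sorted(clash)}")
    return {"core": dict(CORE_DIMENSIONS), "custom": dict(custom)}


# Product-specific layer, modeled on the coaching assistant example.
COACHING_CUSTOM = {
    "methodology_adherence": "Faithfully follows the client's official training materials",
    "appropriate_escalation": "Progresses step-by-step and flags when human support is needed",
    "encouragement": "Provides the motivational support central to the experience",
}

rubric = build_rubric(COACHING_CUSTOM)
print(len(rubric["core"]), len(rubric["custom"]))  # 8 3
```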

3. Scale with weighted scoring and hybrid review

Weighted scoring

Not all dimensions matter equally. Weighting forces teams to confront real tradeoffs. In some contexts, a faster, cheaper response that resolves the task is better than a slower, more nuanced answer. In other cases, correctness or safety must dominate even if it means slower response times and higher token usage. These tradeoffs must be made as explicit product decisions and shared with stakeholders.
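A minimal sketch of weighted scoring, assuming per-dimension scores on a 0 to 1 scale. The weight profiles and dimension names are hypothetical. The optional `gates` parameter is one way (our illustration, not a standard technique name) to encode "safety must dominate": a gated dimension below its floor zeroes the result, so speed cannot compensate for it.

```python
from __future__ import annotations


def weighted_score(scores: dict[str, float], weights: dict[str, float],
                   gates: dict[str, float] | None = None) -> float:
    """Weighted mean of dimension scores; any gated dimension below its floor yields 0."""
    gates = gates or {}
    missing = (weights.keys() | gates.keys()) - scores.keys()
    if missing:
        raise ValueError(f"no score for: {sorted(missing)}")
    for dim, floor in gates.items():
        if scores[dim] < floor:
            return 0.0
    total = sum(weights.values())
    return sum(scores[d] * w for d, w in weights.items()) / total


# Two hypothetical weight profiles for different product contexts.
SPEED_FIRST = {"accuracy": 0.3, "safety": 0.2, "latency": 0.5}
SAFETY_FIRST = {"accuracy": 0.4, "safety": 0.5, "latency": 0.1}

scores = {"accuracy": 0.9, "safety": 1.0, "latency": 0.4}  # accurate and safe, but slow
print(round(weighted_score(scores, SPEED_FIRST), 2))   # 0.67
print(round(weighted_score(scores, SAFETY_FIRST), 2))  # 0.9
```

The same response scores differently under each profile, which is the point: the weights are an explicit product decision, not a technical default.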

Hybrid review pipeline

We pair weighted scoring with a three-layer review process:

- Manual reviews – Establish gold standards, calibrate scoring, handle edge cases

- Automated reviews (LLM-as-judge) – Expand coverage across turns, sessions, and cohorts

- Synthesis – Track disagreement, flag ambiguous cases, detect drift

Automated evaluation can fail when teams forget that it encodes assumptions—and those assumptions drift silently over time. Judges should be versioned, audited, and reviewed. Automation scales evaluation; it doesn't replace responsibility.
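The synthesis layer can start small: keep a human-labeled gold set from manual review, re-run each judge version against it, and compare. This hypothetical sketch assumes integer scores on a 1 to 5 scale and a judge identified by a version string. It reports mean absolute error per judge version (rising error on the same gold set is a drift signal) and lists items where judge and human disagree by more than a tolerance, as candidates for another human look.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Review:
    item_id: str
    human: int          # gold score from manual review (assumed 1-5 scale)
    judge: int          # LLM-as-judge score for the same item
    judge_version: str  # hypothetical identifier, e.g. judge prompt + model


def calibration_report(reviews: list[Review], tolerance: int = 1) -> dict[str, dict]:
    """Per judge version: mean absolute error vs. humans, plus items to re-review."""
    by_version: dict[str, list[Review]] = {}
    for r in reviews:
        by_version.setdefault(r.judge_version, []).append(r)
    report = {}
    for version, rs in sorted(by_version.items()):
        report[version] = {
            "mean_abs_error": mean(abs(r.human - r.judge) for r in rs),
            "flagged": [r.item_id for r in rs if abs(r.human - r.judge) > tolerance],
        }
    return report


reviews = [
    Review("a", 5, 5, "judge-v1"), Review("b", 2, 3, "judge-v1"), Review("c", 4, 4, "judge-v1"),
    Review("a", 5, 4, "judge-v2"), Review("b", 2, 5, "judge-v2"), Review("c", 4, 4, "judge-v2"),
]
report = calibration_report(reviews)
print(report["judge-v2"]["flagged"])  # ['b']
```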

4. Connect evaluation to observability data

Evaluation becomes operational when paired with telemetry.

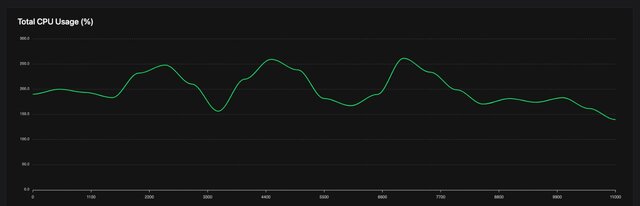

We instrument signals like:

- Exchanges per session

- Common intents and queries

- Tool usage, failures, and latency

- Session cost and retry patterns

- Points where users stall or escalate

This is where observability turns into traceability: you can link quality drops to specific intents, cohorts, releases, or system changes.

It's also essential for post-mortem analysis: being able to show not just what the assistant said, but which system components were involved and which safeguards were triggered or bypassed.
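Traceability depends on every component emitting events that share a session ID. The sketch below uses a hypothetical minimal event schema, not any particular vendor's format (in production, a tracing standard such as OpenTelemetry covers similar ground). Given sessions already judged as failed, it counts which component, failure status, intent, and release co-occur, and it reconstructs one session's timeline for a post-mortem.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Span:
    session_id: str
    turn: int
    component: str  # hypothetical names: "model", "retrieval", "tool:order_lookup"
    status: str     # "ok" or a failure label such as "timeout"
    latency_ms: float
    intent: str
    release: str


def failure_hotspots(spans: list[Span], failed_sessions: set[str]) -> list[tuple[tuple[str, ...], int]]:
    """Count non-ok spans in failed sessions by (component, status, intent, release)."""
    counts = Counter(
        (s.component, s.status, s.intent, s.release)
        for s in spans
        if s.session_id in failed_sessions and s.status != "ok"
    )
    return counts.most_common()


def session_timeline(spans: list[Span], session_id: str) -> list[Span]:
    """All spans for one session, ordered by turn (emission order kept within a turn)."""
    return sorted((s for s in spans if s.session_id == session_id), key=lambda s: s.turn)


spans = [
    Span("s1", 1, "retrieval", "ok", 120.0, "refund", "v2"),
    Span("s1", 1, "tool:order_lookup", "timeout", 5000.0, "refund", "v2"),
    Span("s2", 1, "tool:order_lookup", "timeout", 5000.0, "refund", "v2"),
    Span("s3", 1, "retrieval", "ok", 90.0, "shipping", "v2"),
    Span("s3", 2, "model", "ok", 800.0, "shipping", "v2"),
]
print(failure_hotspots(spans, {"s1", "s2"}))
# [(('tool:order_lookup', 'timeout', 'refund', 'v2'), 2)]
```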

5. Tie quality to outcomes leaders care about

We map evaluation and telemetry to KPIs in three tiers:

- Outcome-oriented: e.g., task completion, deflection, retention

- Experience-oriented: e.g., perceived helpfulness, clarity, trust

- Product and strategy: e.g., insights generation, knowledge gaps, roadmap signals
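To keep outcome KPIs honest, it helps to compute raw deflection next to a stricter variant. This sketch assumes hypothetical per-session fields: whether the task was completed (from your session success definition), whether the user contacted human support afterward, and whether the same issue came back later. "Resolved deflection" here is our own illustrative metric: sessions that avoided support and actually solved the problem.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class SessionOutcome:
    task_completed: bool
    contacted_support: bool     # user reached a human afterward (time window is your choice)
    issue_returned_later: bool  # same problem resurfaced in a later session or ticket


def outcome_kpis(outcomes: list[SessionOutcome]) -> dict[str, float]:
    """Task completion, raw deflection, and the stricter resolved deflection."""
    if not outcomes:
        raise ValueError("no sessions to score")
    n = len(outcomes)
    deflected = [o for o in outcomes if not o.contacted_support]
    resolved = [o for o in deflected if o.task_completed and not o.issue_returned_later]
    return {
        "task_completion": sum(o.task_completed for o in outcomes) / n,
        "deflection": len(deflected) / n,
        "resolved_deflection": len(resolved) / n,
    }


outcomes = [
    SessionOutcome(True, False, False),   # solved, no support needed
    SessionOutcome(False, False, False),  # gave up without contacting support
    SessionOutcome(True, False, True),    # looked solved, but the issue came back
    SessionOutcome(False, True, False),   # escalated to a human
]
print(outcome_kpis(outcomes))
# {'task_completion': 0.5, 'deflection': 0.75, 'resolved_deflection': 0.25}
```

A dashboard showing 75% deflection looks healthy; the 25% resolved figure tells a different story.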

In mature organizations, no one debates whether financial controls are necessary—they're non-negotiable, auditable, and boring when they work. AI evaluation needs to be treated the same way. When it's absent, the consequences aren't immediately visible, but they're existential once they surface.

How we work with companies on this

Our work is about helping teams surface risks they didn't realize they were carrying, and opportunities for growth or optimization they aren’t seeing.

Typically we collaborate to:

- Define CORE and CUSTOM dimensions

- Decide what "session success" actually means

- Instrument logs, intents, and tool execution

- Run hybrid reviews on a regular cadence

- Track cohort-level trends consistently

- Review KPIs and surface issues or roadmap opportunities

And these are the key shifts:

- Don't just evaluate answers—evaluate sessions and patterns across users

- Treat AI assistants and conversational interfaces as systems, not models

- Weight dimensions by what actually matters to your product: cost, accuracy, safety

- Use automation to scale, but let human judgment win

- Connect conversation quality to business outcomes

That's what turns "it seems to work" into something you can measure, trace, and fix.